Can AI Detect AI? A Test of Hive Moderation's AI-Generated Content Detection Tools

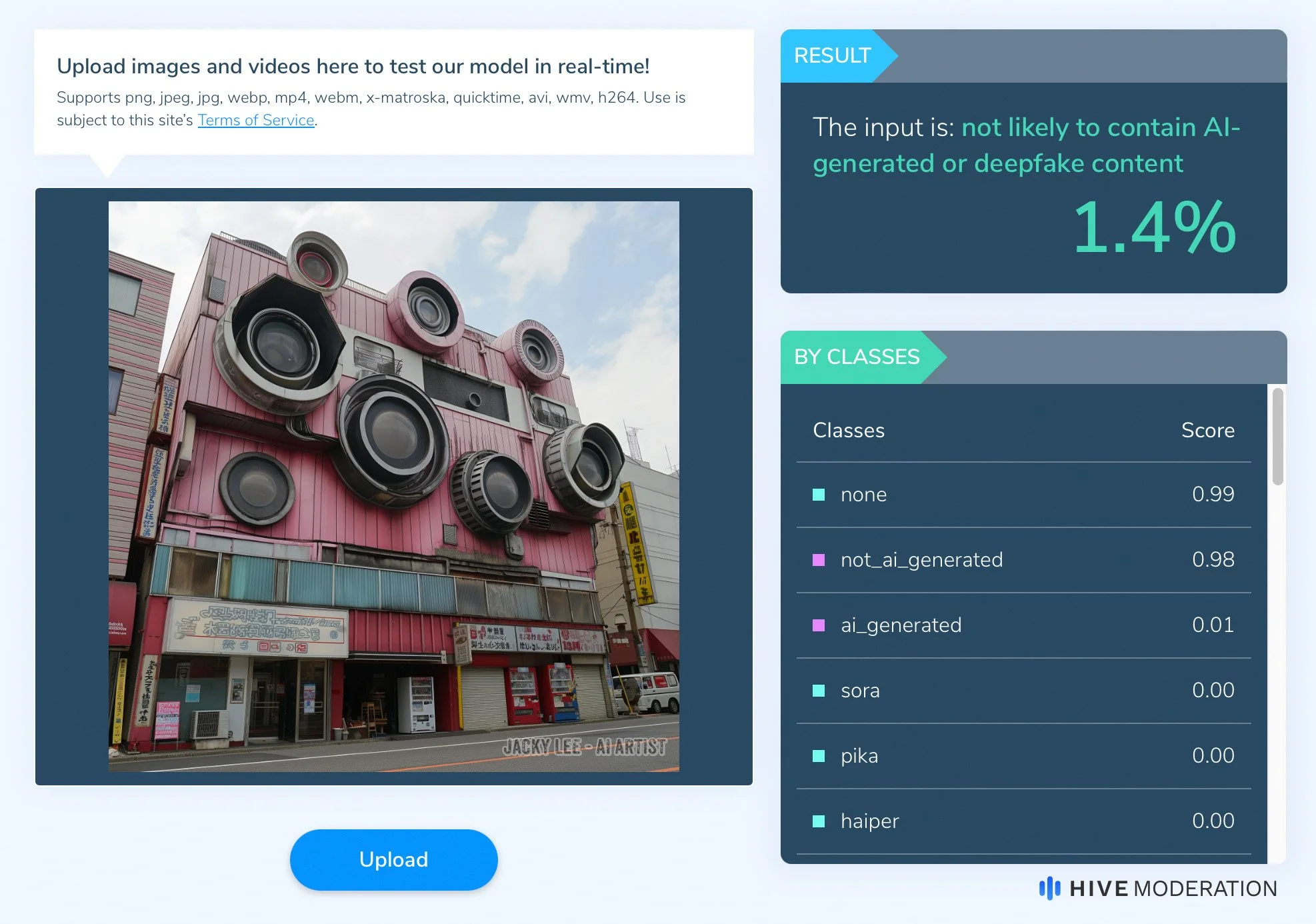

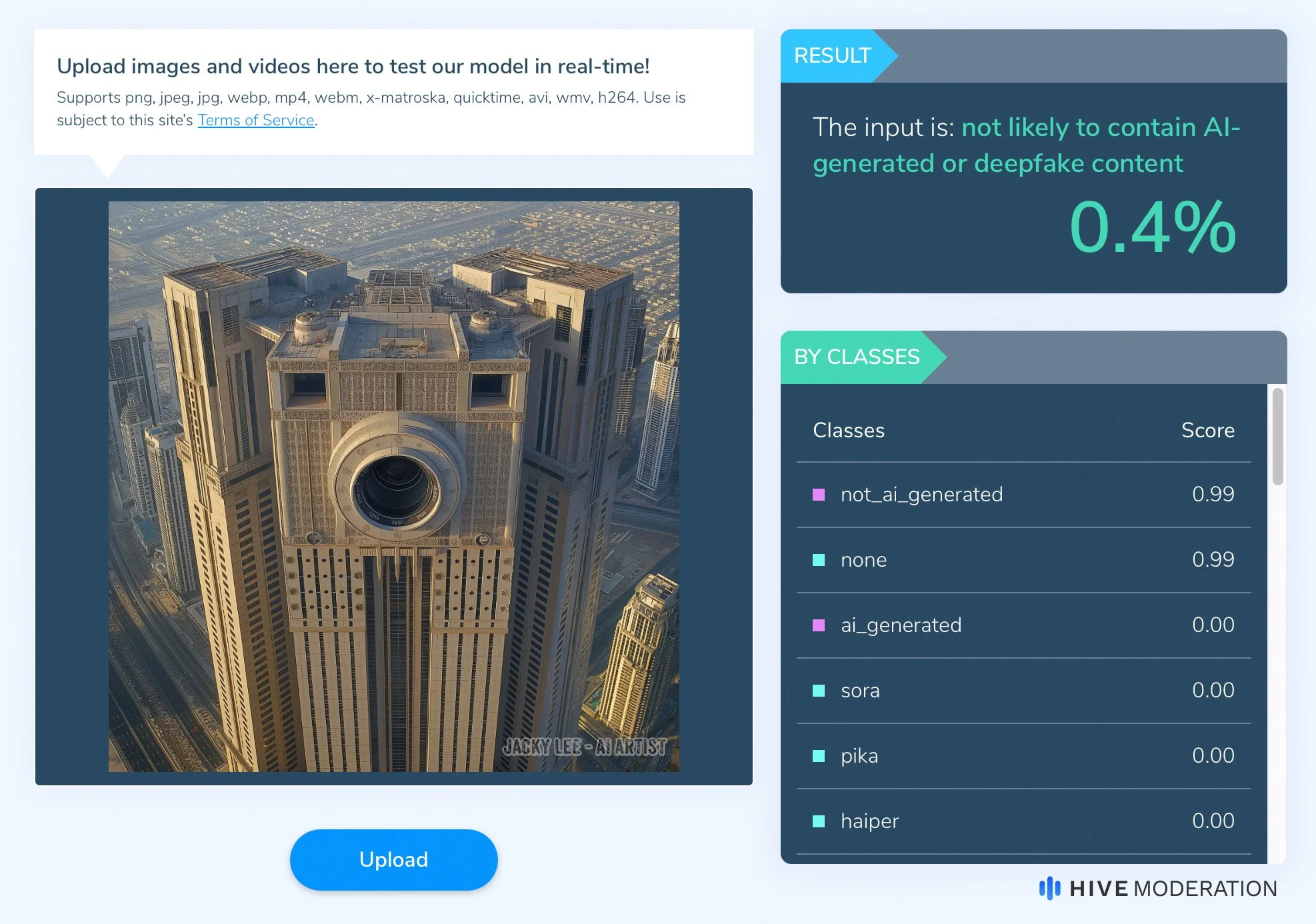

Jacky Lee, our art director and an esteemed photographer known for his exceptional landscape and architecture work, recently ran a fascinating experiment. Drawing on his expertise in AI-generated photography, he submitted four of his AI-generated artworks to the Hive Moderation AI art detector, a tool designed to differentiate between AI-created and human-created images.

The Experiment

The experiment involved a selection of four AI-generated images that Jacky believed were indistinguishable from real photographs. His aim was to challenge the capabilities of the Hive Moderation AI art detector and to explore the boundary between AI and human artistic expression.

Exploring Hive's Capabilities

The Hive Moderation AI-Generated Content Detection tool has three core functions, each aimed at a different type of media: text, images and videos, and audio. This multifaceted approach is intended to let a broad spectrum of AI-generated material be identified and assessed.

Technical Analysis

In our experiment, we used only the image and video detection capability of the Hive Moderation tool. This function relies on machine learning to distinguish AI-generated visuals from those created by human hands. The results of our test were surprising: the tool failed to recognize the AI origin of the photos Jacky submitted. This outcome raises intriguing questions about the accuracy and reliability of AI art detection technologies.

Ethical Considerations and Fairness in Competitions

Our findings raise a significant concern about the use of AI detection tools in settings like photography competitions. If a contest explicitly excludes AI-generated content, relying solely on these tools to verify submissions is risky in two ways: AI-generated entries can pass undetected, as they did in our test, and human-created images can be unfairly excluded if the technology mistakenly identifies them as AI-generated. Conversely, in competitions designed specifically for AI art, misclassifying AI works as non-AI can unjustly disqualify genuine entries. These scenarios underscore the need for fairness and the potential consequences of over-relying on technology that is not yet foolproof.
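The two kinds of mistake described above are usually called false positives (human work flagged as AI) and false negatives (AI work passed as human). As a rough illustration, the Python sketch below tallies both rates from a list of results. The `Result` class and `error_rates` function are hypothetical names invented for this example and are not part of any Hive Moderation API. The sample input assumes that all four of Jacky's images were classified as human-made, which is how we read the test outcome reported above.

```python
from dataclasses import dataclass


@dataclass
class Result:
    is_ai: bool       # ground truth: was the image actually AI-generated?
    flagged_ai: bool  # detector verdict: did the tool label it AI-generated?


def error_rates(results):
    """Return (false_positive_rate, false_negative_rate).

    A rate is None when it cannot be measured, i.e. when the sample
    contains no human-made images or no AI-generated images.
    """
    ai = [r for r in results if r.is_ai]
    human = [r for r in results if not r.is_ai]
    false_neg = sum(1 for r in ai if not r.flagged_ai)   # AI work passed as human
    false_pos = sum(1 for r in human if r.flagged_ai)    # human work flagged as AI
    fnr = false_neg / len(ai) if ai else None
    fpr = false_pos / len(human) if human else None
    return fpr, fnr


# Assumed outcome of the test above: four AI-generated images, none flagged.
experiment = [Result(is_ai=True, flagged_ai=False) for _ in range(4)]
fpr, fnr = error_rates(experiment)
print(fpr, fnr)  # None 1.0
```

On this sample every AI image was missed, so the false negative rate is 1.0, while the false positive rate is undefined because no human-made photographs were tested. Four images are far too few to characterize the tool in general; a fair evaluation would need a much larger sample containing both kinds of image.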

Accusations and the Responsibility of Tool Use

The implications extend beyond competitions into the broader artistic community. There is growing concern about individuals using AI detection tools to challenge the authenticity of artists' works, claiming they are AI-generated when they are not. Such accusations can tarnish reputations and devalue genuine artistic effort. It is crucial for the community to recognize that no AI detection tool currently offers 100% accuracy. These tools should be used with caution, and any claim should be verified through multiple methods to ensure fairness and maintain trust in the artistic and technological communities.