Navigating the New Frontier: AI-Driven Mental Health Support

Woebot Health founder Alison Darcy | 60 Minutes

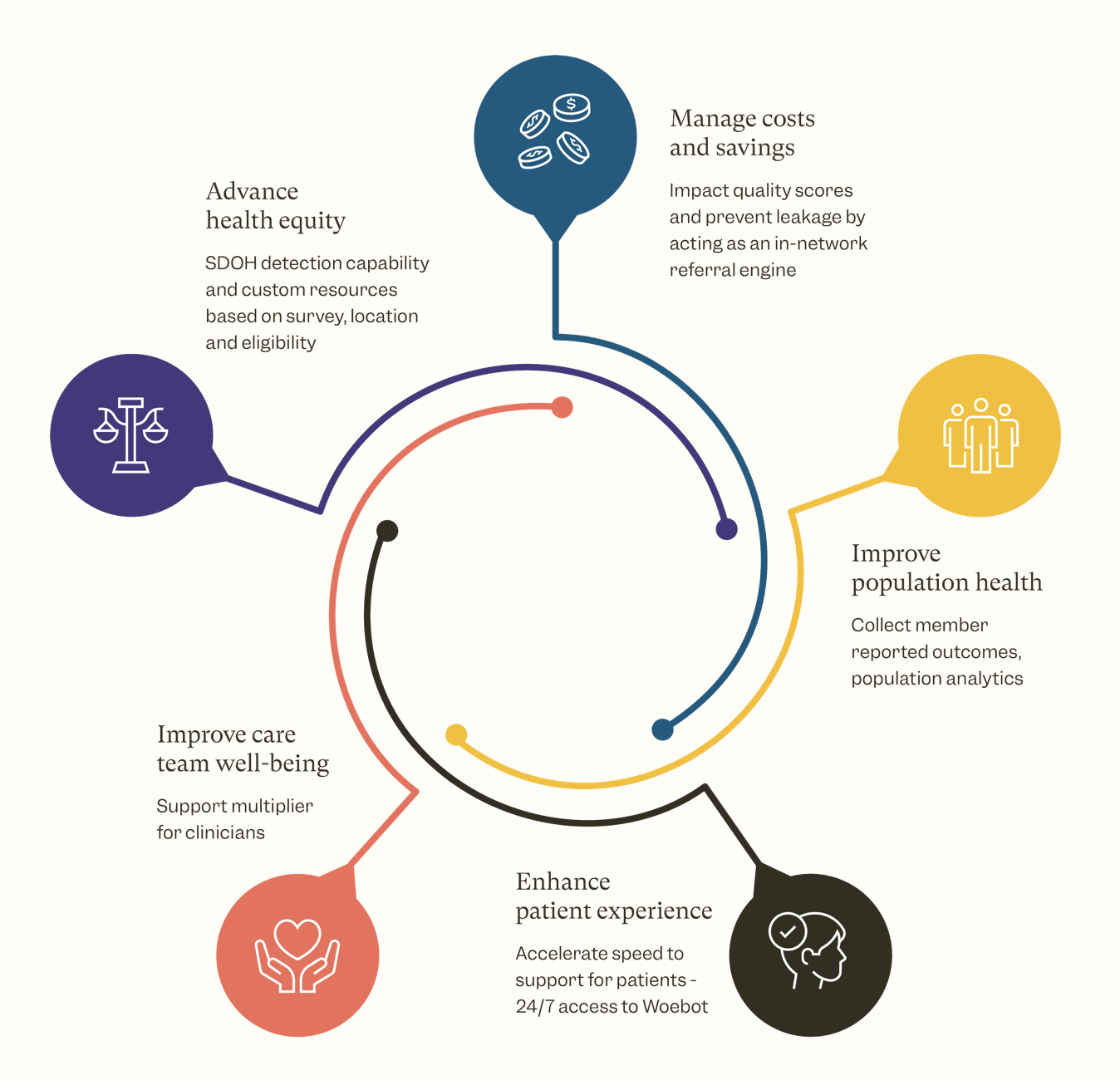

In our fast-paced, technology-driven world, artificial intelligence is reshaping the landscape of mental health support, offering innovative solutions to bridge the gap between the demand for therapy and the availability of human therapists. With a notable shortage of mental health professionals and an increasing number of individuals in need, AI-driven chatbots like Woebot are stepping in as supplementary tools to provide 24/7 support on our smartphones.

Developed by Alison Darcy, a visionary at the intersection of psychology and technology, Woebot represents a pioneering approach to mental health care. It's not just about making therapy more accessible; it's about modernizing psychotherapy itself to fit our digital age. Woebot and similar platforms are designed to help users manage a range of issues, from depression and anxiety to addiction and loneliness, using cognitive behavioral therapy techniques delivered through text conversations.

Image Credit: https://woebothealth.com

However, the journey towards AI-powered mental health support is not without its challenges. Concerns about effectiveness, ethics, and the potential for AI to misinterpret users or give harmful advice are valid. The balance between innovation and safety is delicate, as demonstrated by experiences with other chatbots like Tessa, which was designed to prevent eating disorders but inadvertently gave advice that ran counter to recovery.

Despite these hurdles, the potential for AI to reshape mental health care is hard to ignore. Platforms like Woebot have already touched the lives of millions, offering a glimmer of hope and support where traditional resources may be scarce or inaccessible. The future of mental health care may indeed lie in the palm of our hands, with AI acting as a bridge to human connection and understanding.

As we navigate this new frontier, it's crucial to proceed with caution, ensuring that AI-driven mental health tools are developed thoughtfully, with robust safeguards to protect users. The goal is not to replace human therapists but to augment the support they provide, ensuring that everyone, regardless of circumstance, has access to the help they need.