LucidSim: The AI-Powered Breakthrough Transforming Robot Training and Generalization

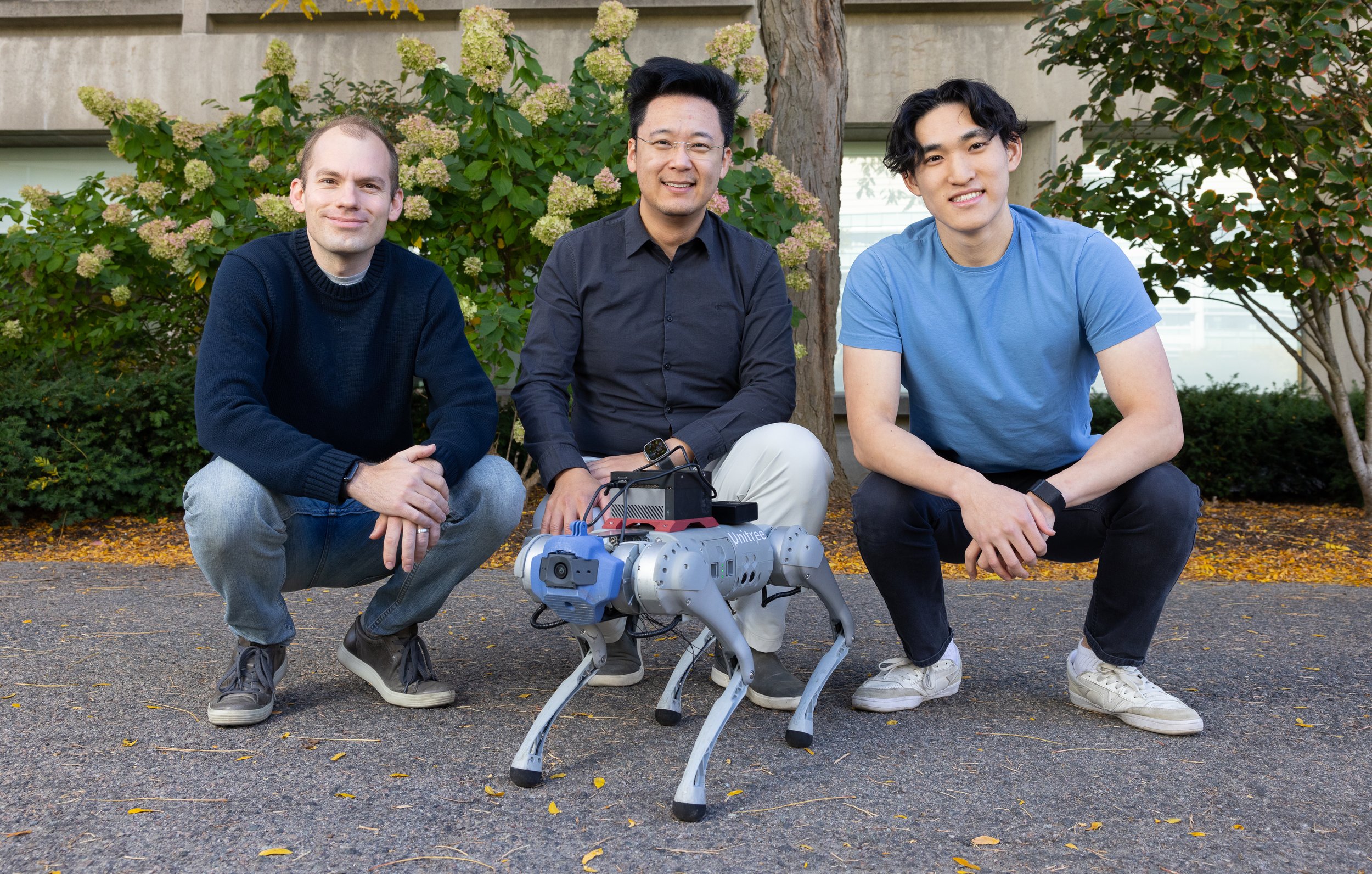

Image Source: MIT CSAIL

Robotics has long faced a towering challenge: generalization, the ability of machines to adapt to unpredictable environments and conditions. From the sophisticated programming of the 1970s to today's deep learning, one bottleneck has persisted: the need for high-quality, scalable training data. Researchers at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) have unveiled a promising solution to this problem with LucidSim, a cutting-edge system that uses generative AI and physics simulations to train robots in virtual environments, producing skills that transfer well to the real world.

Bridging the 'Sim-to-Real' Gap: How LucidSim Works

One of the most persistent obstacles in robotics is the ‘sim-to-real gap’—the difficulty of transferring skills learned in virtual simulations to the complex and dynamic physical world. Traditional simulations often lack the realism required to prepare robots for real-world conditions. LucidSim addresses this issue by combining physics simulators with generative AI models, creating highly diverse and realistic training environments.

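LucidSim uses large language models to generate many varied, detailed text descriptions of environments, which generative image models then turn into pictures. To keep these images consistent with the simulated world, the system extracts depth maps, which provide geometric information, and semantic masks, which label different parts of the scene, from the physics simulator and uses them to guide image generation. To further immerse robots in realistic scenarios, the system employs a novel technique called Dreams In Motion (DIM), which computes how each pixel moves between frames, based on the scene's 3D geometry and the change in the robot's viewpoint, to warp a single generated image into a short, coherent video from the robot's perspective.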
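The pixel-warping idea behind DIM can be illustrated with standard depth-based reprojection. The sketch below is a minimal illustration under stated assumptions, not the LucidSim implementation: it assumes a pinhole camera with hypothetical intrinsics `fx`, `fy`, `cx`, `cy`, a camera that only translates (no rotation), and nearest-pixel forward splatting with a depth buffer so that nearer surfaces hide farther ones. The function names `pixel_flow`, `warp_frame`, and `warp_to_clip` are hypothetical.

```python
import math
from typing import List, Optional, Tuple

# Hypothetical types for this sketch: a grayscale image and a per-pixel depth map.
Image = List[List[Optional[float]]]
Depth = List[List[float]]
Flow = List[List[Optional[Tuple[float, float, float]]]]


def pixel_flow(depth: Depth, fx: float, fy: float, cx: float, cy: float,
               t: Tuple[float, float, float]) -> Flow:
    """Per-pixel (du, dv, new_depth) when the camera translates by t (assumed: no rotation)."""
    tx, ty, tz = t
    flow: Flow = []
    for v, row in enumerate(depth):
        flow_row: List[Optional[Tuple[float, float, float]]] = []
        for u, d in enumerate(row):
            # Back-project pixel (u, v) at depth d into 3D camera coordinates.
            x, y, z = (u - cx) * d / fx, (v - cy) * d / fy, d
            # Express that 3D point in the moved camera's frame.
            x2, y2, z2 = x - tx, y - ty, z - tz
            if z2 <= 0:
                flow_row.append(None)  # point is now behind the camera
                continue
            # Re-project into the new image plane and record the displacement.
            u2, v2 = fx * x2 / z2 + cx, fy * y2 / z2 + cy
            flow_row.append((u2 - u, v2 - v, z2))
        flow.append(flow_row)
    return flow


def warp_frame(image: Image, depth: Depth, fx: float, fy: float, cx: float,
               cy: float, t: Tuple[float, float, float]) -> Image:
    """Forward-splat each source pixel to its new location; nearer points win."""
    h, w = len(image), len(image[0])
    out: Image = [[None] * w for _ in range(h)]
    zbuf = [[math.inf] * w for _ in range(h)]
    flow = pixel_flow(depth, fx, fy, cx, cy, t)
    for v in range(h):
        for u in range(w):
            f = flow[v][u]
            if f is None:
                continue
            du, dv, z2 = f
            # Round to the nearest target pixel (floor(x + 0.5), not banker's rounding).
            ut, vt = math.floor(u + du + 0.5), math.floor(v + dv + 0.5)
            if 0 <= ut < w and 0 <= vt < h and z2 < zbuf[vt][ut]:
                zbuf[vt][ut] = z2
                out[vt][ut] = image[v][u]
    return out


def warp_to_clip(image: Image, depth: Depth, fx: float, fy: float, cx: float,
                 cy: float, step: Tuple[float, float, float],
                 n_frames: int) -> List[Image]:
    """Turn one image into n_frames by moving the camera by `step` each frame."""
    frames = []
    for k in range(n_frames):
        t = (k * step[0], k * step[1], k * step[2])
        frames.append(warp_frame(image, depth, fx, fy, cx, cy, t))
    return frames
```

For instance, with a constant depth of 1, unit focal lengths, and principal point (1, 1) on a 3×3 image, moving the camera one unit to the right shifts every pixel one column to the left and leaves the rightmost column empty (`None`). Filling such disocclusion holes is a separate problem that this sketch does not attempt.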

The Birth of LucidSim: From Idea to Innovation

The inspiration for LucidSim stemmed from a casual conversation outside a Cambridge taqueria. Alan Yu, an MIT undergraduate, and Ge Yang, a postdoctoral associate, discussed the limitations of existing robot training methods. "We wanted to teach vision-equipped robots how to improve using human feedback. But then, we realized we didn't have a pure vision-based policy to begin with," Yu recalls. This brainstorming session led to the development of LucidSim, a system that balances data diversity and visual realism.

LucidSim’s Superior Performance in Real-World Tests

The research team tested LucidSim by training robots on challenging tasks, such as navigating stairs, identifying objects, and overcoming obstacles. Results demonstrated its superiority:

Obstacle Navigation: Robots trained with LucidSim achieved an 88% success rate, compared to just 15% using expert-taught methods.

Stair Climbing: LucidSim-trained robots succeeded in all trials, whereas traditional systems struggled.

Data Efficiency: Doubling the training data in LucidSim dramatically improved performance, showcasing its scalability.

This self-directed approach, in which robots learn from data they collect themselves in simulation, enabled them not only to learn faster but also to outperform systems trained on human demonstrations, paving the way for more efficient training processes.

A Brief History of Robot Development

The evolution of robotics has been marked by significant milestones:

1921: The term "robot" was introduced by Czech playwright Karel Čapek in his play "R.U.R. (Rossum's Universal Robots)", depicting artificial workers.

1954: George Devol invented the first digitally operated and programmable robot, later known as Unimate.

1961: Unimate was deployed in a General Motors factory, marking the beginning of industrial robotics.

1973: KUKA Robotics developed FAMULUS, one of the first articulated robots with six electromechanically driven axes.

1980s: The integration of robotics into manufacturing processes became widespread, building on rapid growth in the previous decade: the number of industrial robots in use in the United States grew from about 200 in 1970 to nearly 4,000 by 1980.

2000s: Advancements in artificial intelligence and machine learning led to the development of more autonomous and intelligent robots.

These milestones underscore the importance of continuous innovation in robotics, leading to systems like LucidSim that push the boundaries of machine learning and adaptability.

Applications Beyond Quadruped Locomotion

While LucidSim was tested on quadrupedal robots performing parkour-like tasks, its potential extends far beyond. The researchers aim to apply the system to mobile manipulation, enabling robots to handle objects in dynamic environments such as warehouses or homes. This application requires mastering color perception and intricate object interactions, domains that still rely heavily on real-world demonstrations.

LucidSim's scalability could revolutionize this field by enabling virtual data collection for thousands of tasks, overcoming the logistical challenges of setting up physical training scenarios.

Critical Reception and Future Directions

LucidSim has garnered acclaim from experts in robotics and AI. Shuran Song, Assistant Professor at Stanford University, pointed to visual realism as the problem LucidSim tackles: “One of the main challenges in sim-to-real transfer for robotics is achieving visual realism in simulated environments. The LucidSim framework provides an elegant solution by using generative models to create diverse, highly realistic visual data for any simulation. This work could significantly accelerate the deployment of robots trained in virtual environments to real-world tasks,” Song said.

The team’s next steps include training humanoid robots and exploring applications in robotic arms for tasks requiring fine motor skills, such as preparing coffee or assembling intricate objects. These advancements could redefine robotics’ role in industries ranging from manufacturing to service.

Funding and Research Presentation

The development of LucidSim was supported by a wide range of organizations, including the National Science Foundation, the Office of Naval Research, and Amazon. The research was conducted by a collaborative team of CSAIL affiliates: Ge Yang, Alan Yu, Ran Choi, Yajvan Ravan, John Leonard, and Phillip Isola. The results were presented at the prestigious Conference on Robot Learning (CoRL) in November 2024.

Source: MIT Technology Review, MIT CSAIL, Wikipedia, National Inventors Hall of Fame, ThoughtCo., Kuka, Wevolver