OpenAI Unveils o3: Pioneering Reasoning Models Edge Closer to AGI

Image Source: OpenAI

OpenAI has announced the launch of its latest artificial intelligence system, OpenAI o3, marking a significant advancement in AI reasoning capabilities. The new system, designed to tackle complex problems in mathematics, science, and computer programming, has demonstrated superior performance compared to existing industry-leading AI technologies.


Image Source: OpenAI

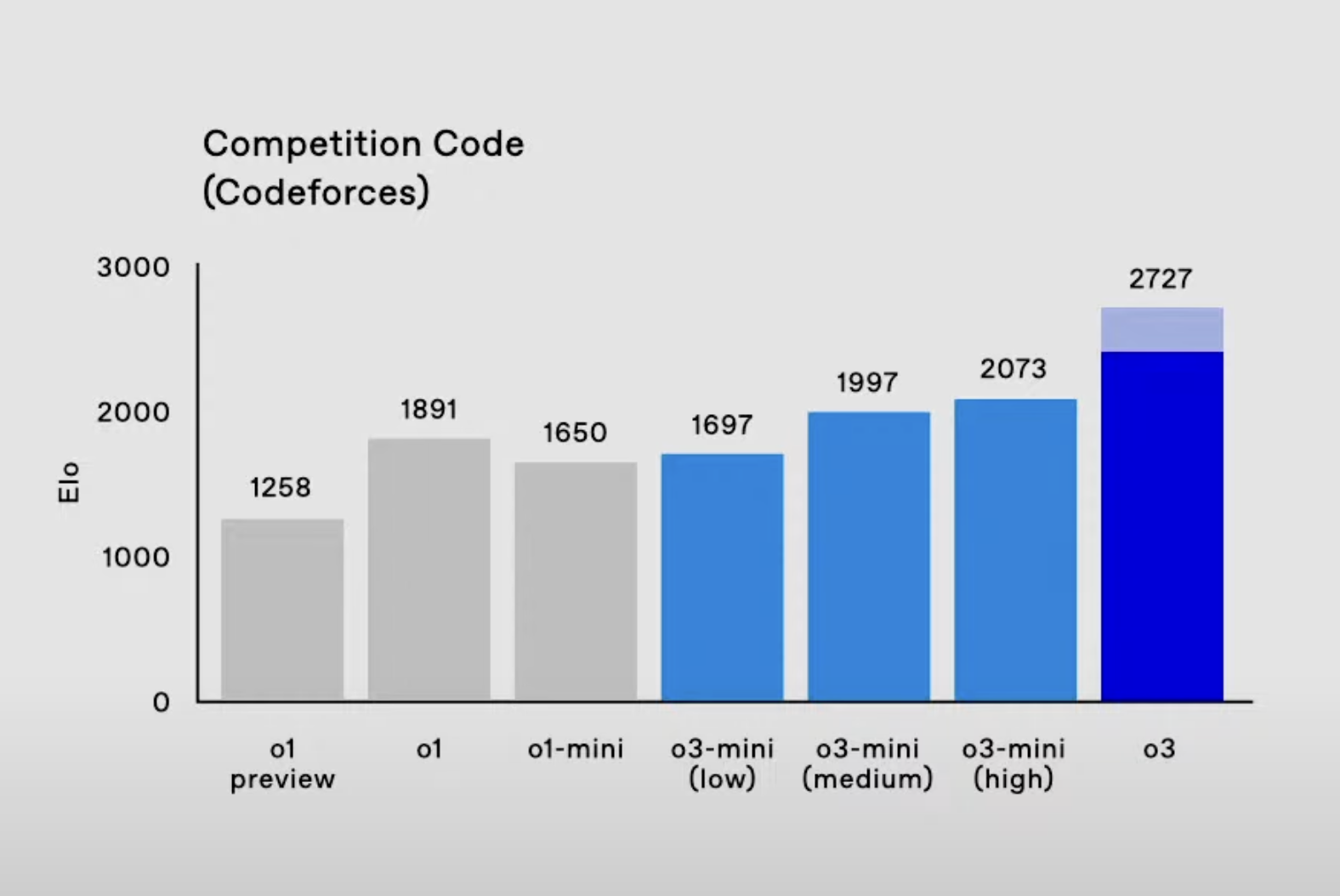

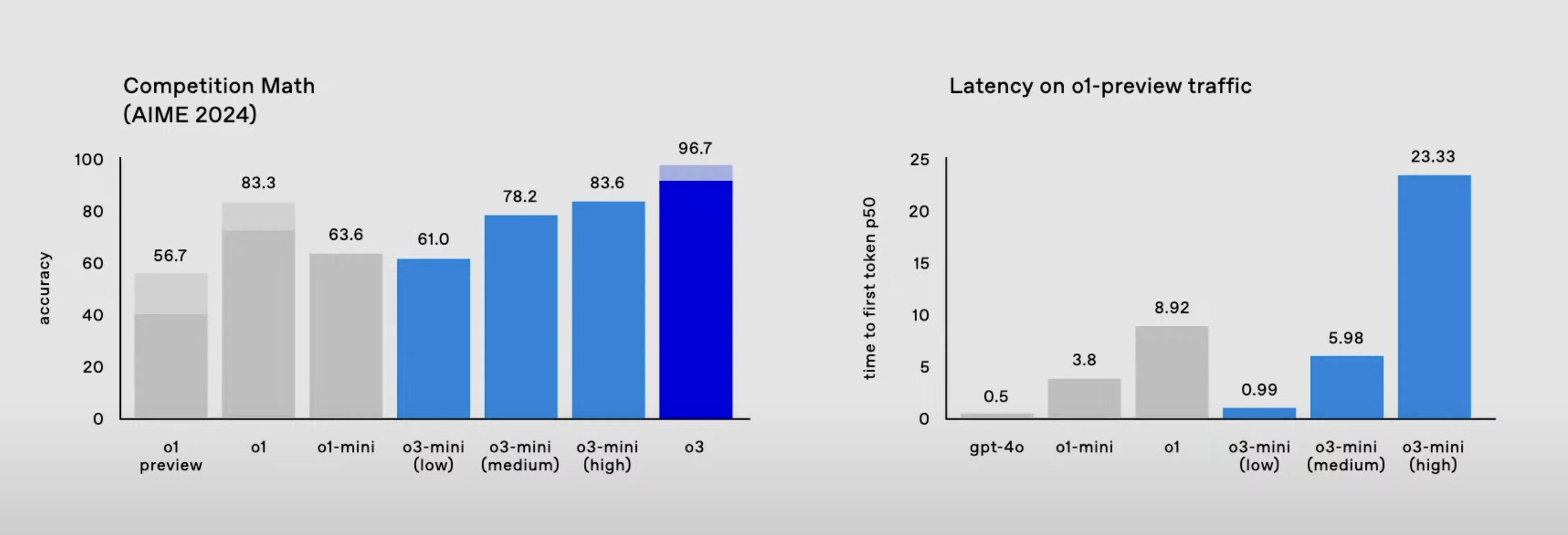

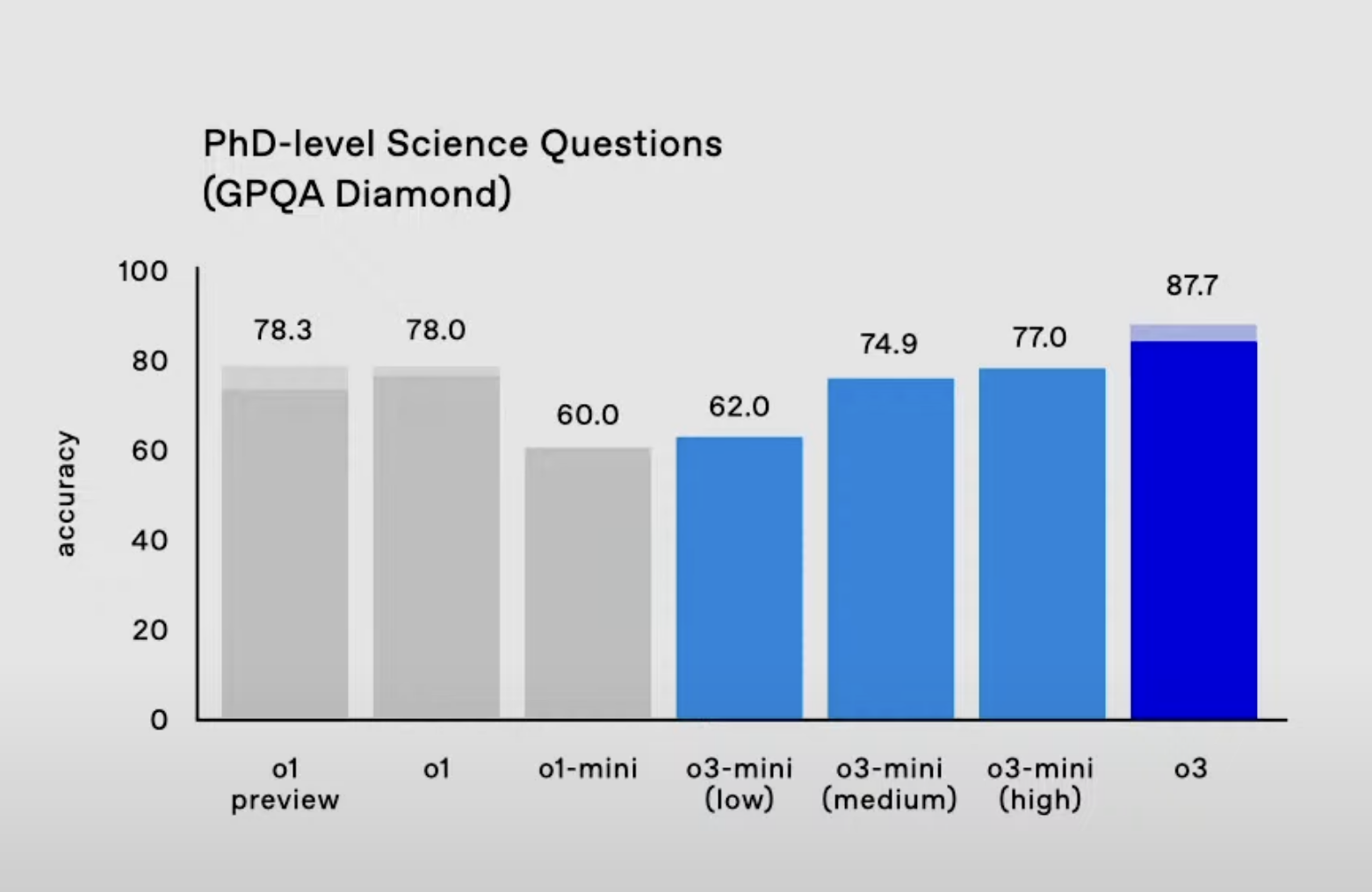

Breakthrough Performance in Reasoning and Programming

During an online presentation, OpenAI CEO Sam Altman highlighted o3's remarkable abilities, stating, "This model is incredible at programming". The company revealed that o3 outperformed its predecessor, o1, by over 20% in common programming tasks. Additionally, o3 surpassed the performance of Jakub Pachocki, OpenAI's chief scientist, in a competitive programming test.


Naming Controversy: The Skip of o2

In an unusual move, OpenAI named its latest model "o3" rather than "o2", the expected next name in the sequence. According to reports from The Information, the company skipped "o2" to avoid a potential trademark conflict with the British telecom provider O2. CEO Sam Altman confirmed this rationale during a livestream event, a reminder that trademark law can constrain how companies name their models.


Enhanced Safety Through Deliberative Alignment

OpenAI introduced a novel training paradigm called "deliberative alignment", which directly teaches AI models the text of human-written safety specifications and trains them to reason explicitly about these specifications before generating responses. Using chain-of-thought (CoT) reasoning, o3 can reflect on a user prompt, identify the relevant safety policies, and produce safer, more aligned outputs, without requiring human-written chains of thought or answers as training data.

This method marks a departure from previous alignment techniques such as Reinforcement Learning from Human Feedback (RLHF) and Constitutional AI (CAI), which only used safety specifications to generate training labels. Deliberative alignment allows o3 to internally reference safety guidelines, enhancing its ability to navigate complex and borderline scenarios effectively.


Operational Advantages and Limitations

OpenAI o3 reasons by breaking problems down into manageable components, which yields more reliable solutions in domains such as physics, mathematics, and other sciences. However, this reasoning step increases computational requirements and operating costs. Users may also wait longer for a response than they would with a standard chatbot, typically anywhere from seconds to minutes.

Despite these improvements, o3 is not infallible. While its reasoning component reduces errors and hallucinations, occasional inaccuracies persist, such as mistakes in simple games like tic-tac-toe. OpenAI acknowledges that continuous refinement is necessary to enhance the system's reliability further.


Path Towards Artificial General Intelligence

OpenAI's o3 has sparked discussions about its potential to approach Artificial General Intelligence (AGI), which OpenAI defines as "highly autonomous systems that outperform humans at most economically valuable work". On the ARC-AGI benchmark, o3 scored 87.5% in its high-compute configuration, indicating substantial progress. However, François Chollet, the benchmark's creator, cautions that o3 still falls short of AGI, because it continues to fail some tasks that are straightforward for humans.

OpenAI is collaborating with the ARC Prize Foundation, the organization behind ARC-AGI, to develop the next generation of AI benchmarks, aiming to better assess and advance the capabilities of its models.


Competitive Landscape and Industry Response

The announcement of o3 comes amid a surge of similar reasoning models from other AI companies, including Google's Gemini 2.0 Flash Thinking Experimental and DeepSeek's DeepSeek-R1. Alibaba's Qwen team has also introduced an open challenger to o1, underscoring the competitive momentum behind advanced reasoning AI.

Despite the promising advancements, some industry observers question the sustainability and cost-effectiveness of reasoning models. The substantial computational power required to operate models like o3 raises concerns about scalability and accessibility for broader applications.


OpenAI's Commitment to AI Safety and Research Collaboration

Recognizing the heightened risks associated with more capable AI systems, OpenAI is actively investing in AI safety research. The company is launching an early access program for safety researchers to explore the implications of frontier models like o3. This initiative complements existing safety measures, including internal testing, external red teaming, and partnerships with AI safety institutes.

OpenAI encourages the research community to develop robust evaluations, demonstrate high-risk capabilities in controlled environments, and contribute to threat modeling and security analysis. By fostering collaboration, OpenAI aims to ensure that as AI systems become more powerful, they remain aligned with human values and societal norms.


Leadership Changes Amidst Technological Advancements

In a notable development, Alec Radford, the lead author behind OpenAI's GPT series, has announced his departure from the company to pursue independent research. Radford's exit comes at a pivotal time as OpenAI continues to push the boundaries of AI capabilities with models like o3 and o3-mini. The future trajectory of OpenAI's research and development efforts remains a point of interest for the AI community.


Future Availability and Deployment Plans

OpenAI plans to roll out o3 to individuals and businesses in early 2025. The model family includes o3 and o3-mini, a smaller variant optimized for specific tasks. Safety researchers can apply for early access starting December 20, 2024. OpenAI expects to launch o3-mini around the end of January 2025, with the full o3 model to follow shortly after. These models will be accessible through OpenAI's services, with subscription plans tailored to professional and enterprise users.


Source: OpenAI, The New York Times, Yahoo! Finance