DeepSeek’s R1 Model Redefines AI Efficiency, Challenging OpenAI GPT-4o Amid US Export Controls

Image Source: DeepSeek

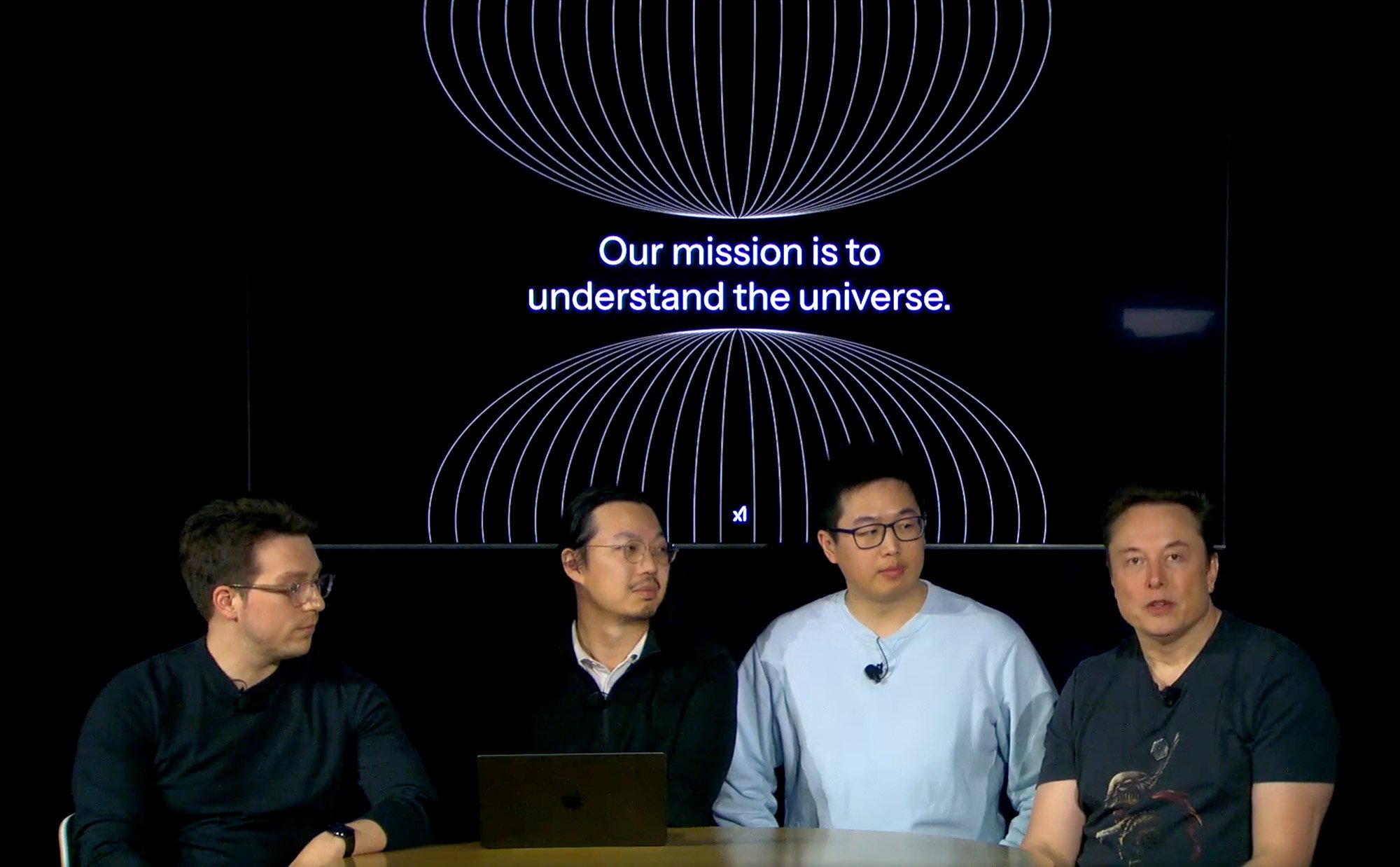

DeepSeek, a Chinese artificial intelligence company, is challenging global AI leaders like OpenAI despite facing significant hurdles, including stringent U.S. export restrictions on advanced hardware. Founded in May 2023 by Liang Wenfeng and backed by High-Flyer, a quant hedge fund, DeepSeek has made remarkable strides in AI development, leveraging innovative methodologies and cost-efficient approaches to create competitive large language models (LLMs).

DeepSeek's Origins: From Finance to Artificial Intelligence

DeepSeek's roots trace back to High-Flyer, a hedge fund co-founded by Liang Wenfeng in 2016. Liang, a Zhejiang University alumnus, began trading during the 2007-2008 financial crisis. By 2019, he established High-Flyer as a fund specializing in AI-driven trading algorithms, and by 2021, the fund relied exclusively on AI for its operations, drawing comparisons to Renaissance Technologies.

In April 2023, High-Flyer announced a pivot to artificial general intelligence (AGI) research, separating these efforts from its financial operations. The result was the creation of DeepSeek, a company focused solely on advancing AI research, with no immediate plans for commercialization. This strategic independence has enabled DeepSeek to prioritize innovation over profit, positioning itself as a leader in foundational AI research.

Technological Achievements and Milestones

DeepSeek's development timeline is marked by significant achievements, showcasing its rapid progress in the AI domain:

November 2023: DeepSeek Coder

The company's first release was an open-source model, available to both researchers and commercial users under the MIT License.

November 2023: DeepSeek LLM and DeepSeek Chat

DeepSeek unveiled its 67-billion-parameter LLM alongside a chatbot version, DeepSeek Chat. While the models demonstrated strong performance in benchmarks, computational efficiency and scalability posed challenges.

May 2024: DeepSeek-V2

Known as the "Pinduoduo of AI" for its competitive pricing, DeepSeek-V2 disrupted the market with a price of just 2 RMB per million output tokens. This release triggered a price war among Chinese tech giants like ByteDance, Tencent, and Alibaba, forcing them to lower the prices of their AI models.

December 2024: DeepSeek-V3

The V3 model was a game-changer, trained on 2,048 NVIDIA H800 GPUs at a reported cost of US$5.58 million for the final training run, significantly lower than its competitors. With 671 billion total parameters (a mixture-of-experts design that activates about 37 billion per token) and training conducted on a dataset of 14.8 trillion tokens, DeepSeek-V3 matched or exceeded benchmark results reported for GPT-4o and Claude 3.5 Sonnet.

January 2025: DeepSeek-R1 and R1-Zero

These models showcased innovative training techniques. R1-Zero was trained with reinforcement learning alone, using Group Relative Policy Optimization (GRPO) and no supervised fine-tuning as a preliminary step, highlighting DeepSeek's focus on training efficiency.
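As a rough illustration of the group-relative idea behind GRPO, the sketch below scores several sampled answers to the same prompt and normalizes each reward against the mean and standard deviation of its own group, rather than against a separately learned value model. The function name, the example rewards, the small `eps` term, and the use of the population standard deviation are assumptions made for illustration. The sketch covers only the advantage calculation and omits the policy update itself.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-8):
    """Normalize each reward against the other samples in its group.

    eps is an assumed safeguard against division by zero when every
    sample in the group receives the same reward.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


# Hypothetical rewards for four sampled answers to one prompt
# (1.0 = judged correct, 0.0 = judged incorrect).
rewards = [1.0, 0.0, 0.0, 1.0]
advantages = group_relative_advantages(rewards)

for reward, advantage in zip(rewards, advantages):
    print(f"reward={reward:.1f} advantage={advantage:+.3f}")
```

Answers that beat their group's average receive a positive advantage and the rest a negative one, so the training signal comes from comparing samples with each other.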

Navigating Hardware Constraints: The Role of A800 and H800 GPUs

DeepSeek's achievements are particularly impressive given the restrictions imposed by U.S. export controls. In October 2022, the U.S. government banned the export of high-performance GPUs, such as NVIDIA's A100 and H100, to China. To comply with these regulations, NVIDIA introduced modified versions like the A800 and H800, which featured reduced interconnect bandwidth but retained the core functionality of the original chips.

NVIDIA A800: A modified version of the A100, the A800 offers reduced interconnect speeds while retaining Ampere architecture features. It allowed Chinese companies to continue advancing AI research under export compliance.

NVIDIA H800: Based on the Hopper architecture, the H800 is a scaled-down version of the H100. Although its chip-to-chip interconnect bandwidth is roughly halved, the H800 remains capable of supporting large-scale AI training when paired with careful software optimization.

The introduction of these GPUs highlights the adaptability of both NVIDIA and Chinese companies in navigating regulatory challenges. Forced to rely on H800 GPUs, which are less capable than the unrestricted H100, DeepSeek focused on architectural efficiency and novel methodologies, including:

FP8 Precision Training: Lowering computational demands while maintaining accuracy.

Infrastructure Algorithm Optimization: Enhancing software to maximize GPU capabilities.

Novel Training Frameworks: Streamlining processes to reduce resource usage.

These strategies allowed DeepSeek to achieve competitive performance despite hardware limitations, proving that innovation can thrive under constraints.
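To give a sense of why lower-precision formats matter, the back-of-envelope sketch below estimates how much memory the weights of a 671-billion-parameter model would occupy at different numeric precisions. It is an illustration only: it assumes every parameter is stored at a single precision and ignores optimizer state, activations, and the common practice in mixed-precision training of keeping some tensors at higher precision. The function and variable names are hypothetical.

```python
# Bytes needed to store one parameter in each numeric format.
BYTES_PER_PARAM = {"FP32": 4, "BF16": 2, "FP8": 1}

# DeepSeek-V3 parameter count as quoted in this article.
NUM_PARAMS = 671e9


def weight_footprint_gb(num_params, bytes_per_param):
    """Return the storage needed for the weights alone, in gigabytes."""
    return num_params * bytes_per_param / 1e9


footprints = {
    name: weight_footprint_gb(NUM_PARAMS, size)
    for name, size in BYTES_PER_PARAM.items()
}

for name, gigabytes in footprints.items():
    print(f"{name}: {gigabytes:,.0f} GB")
```

Under these simplifying assumptions, each halving of the bytes per parameter also halves the weight storage, which is one reason lower precision eases pressure on memory and bandwidth.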

DeepSeek vs. OpenAI: A Cost-Effectiveness Battle

DeepSeek’s cost-efficient strategy contrasts sharply with OpenAI’s resource-intensive approach. While DeepSeek trained its V3 model for $5.58 million over 2.788 million GPU hours using H800 GPUs, OpenAI reportedly utilized over 25,000 A100 GPUs for GPT-4, spending an estimated $63–100 million.

DeepSeek’s ability to achieve comparable performance at a fraction of the cost highlights the potential for resource-constrained innovation, emphasizing that raw computational power is not the only path to success.
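The figures quoted above can be cross-checked with simple arithmetic. The sketch below derives the implied price per GPU hour, an approximate wall-clock training time, and the cost ratio against the GPT-4 estimates. The wall-clock figure assumes all 2,048 GPUs were busy for the entire run, which is a simplification, and the variable names are illustrative.

```python
# Figures as quoted in this article.
GPU_HOURS = 2.788e6                   # total H800 GPU hours for DeepSeek-V3
TRAINING_COST_USD = 5.58e6            # reported DeepSeek-V3 training cost
NUM_GPUS = 2048                       # H800 GPUs in the training cluster
GPT4_COST_RANGE_USD = (63e6, 100e6)   # estimated GPT-4 training cost range

# Implied rental price per GPU hour.
implied_rate_usd = TRAINING_COST_USD / GPU_HOURS

# Approximate wall-clock duration, assuming every GPU ran the whole time.
wall_clock_days = GPU_HOURS / NUM_GPUS / 24

# How many times more the GPT-4 estimates are than the V3 figure.
ratio_low = GPT4_COST_RANGE_USD[0] / TRAINING_COST_USD
ratio_high = GPT4_COST_RANGE_USD[1] / TRAINING_COST_USD

print(f"Implied rate: ${implied_rate_usd:.2f} per GPU hour")
print(f"Wall-clock time: about {wall_clock_days:.0f} days")
print(f"GPT-4 estimate is {ratio_low:.0f}x to {ratio_high:.0f}x the V3 cost")
```

On these numbers, the training run works out to roughly two dollars per GPU hour and a little under two months on the cluster, with the GPT-4 estimates landing at about 11 to 18 times the V3 figure.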

In terms of performance, DeepSeek's models have demonstrated strengths in specific areas. Benchmark tests indicate that DeepSeek-R1 slightly outperforms OpenAI's o1-1217 in mathematical problem-solving, by a margin of 0.6 percentage points. In coding challenges, however, OpenAI's model maintains a lead, suggesting that while DeepSeek excels in certain domains, it may not yet match the comprehensive capabilities of ChatGPT.

Image Source: DeepSeek R1 Technical Paper

Understanding NVIDIA's Advanced GPUs: A100 and H100

NVIDIA's GPUs have been instrumental in advancing artificial intelligence and high-performance computing (HPC). Among its notable models are the A100 and H100, each designed to meet specific computational needs.

NVIDIA A100: Released in 2020, the A100 is built on NVIDIA's Ampere architecture. Key features include:

Third-Generation Tensor Cores: Enhance AI training and inference performance.

Multi-Instance GPU (MIG) Technology: Allows partitioning into up to seven instances, enabling multiple workloads simultaneously.

High Memory Bandwidth: Equipped with 40 GB of HBM2 or 80 GB of HBM2e high-bandwidth memory, facilitating efficient data handling.

The A100 delivers up to 19.5 teraflops (TFLOPS) of double-precision (FP64) performance on its Tensor Cores and up to 312 TFLOPS of dense FP16/BF16 throughput for AI-specific tasks.

NVIDIA H100: Introduced in 2022, the H100 is based on the Hopper architecture and represents a significant leap in performance:

Fourth-Generation Tensor Cores: Provide up to 6x the performance of the A100's third-generation cores, according to NVIDIA.

Transformer Engine: Accelerates AI training and inference, particularly for large language models.

HBM3 Memory: First GPU to feature HBM3, doubling bandwidth and enhancing performance.

The H100 offers up to 67 TFLOPS of double-precision (FP64) performance on its Tensor Cores and up to 989 TFLOPS of dense FP16/BF16 throughput for AI tasks, roughly three times the A100's figures.
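The peak figures quoted in this section can be compared directly. The short sketch below computes the H100-to-A100 ratio for each metric, using only the numbers given above. The dictionary layout and function name are illustrative, and peak throughput is a theoretical ceiling rather than a measure of real training speed.

```python
# Peak throughput in TFLOPS, as quoted in this article.
SPECS = {
    "A100": {"double_precision": 19.5, "ai_tensor": 312.0},
    "H100": {"double_precision": 67.0, "ai_tensor": 989.0},
}


def h100_over_a100(metric):
    """Return how many times higher the H100's peak figure is."""
    return SPECS["H100"][metric] / SPECS["A100"][metric]


ratios = {metric: h100_over_a100(metric) for metric in SPECS["A100"]}

for metric, ratio in ratios.items():
    print(f"{metric}: H100 is about {ratio:.1f}x the A100")
```

Both ratios come out a little above 3x. The "up to 6x" figure cited for the fourth-generation Tensor Cores is a different comparison, so the two numbers need not agree.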

Privacy, Censorship and Ethical Concerns

While DeepSeek’s models excel in efficiency, they raise questions about privacy and censorship. User inputs are collected for training purposes, and there are no explicit opt-out mechanisms, which could lead to concerns about data misuse. Furthermore, DeepSeek's models exhibit censorship tendencies, particularly on politically sensitive topics.

Users have reported instances where DeepSeek's models appear to avoid or deflect questions on sensitive topics, particularly those related to China's political landscape. For example, when prompted to summarize common criticisms of the Chinese government, the model responded:

"Sorry, I’m not sure how to approach this type of question yet. Let’s chat about math, coding, and logic problems instead!"

In contrast, the same model provided detailed responses when asked about criticisms of the U.S. government. This behavior suggests a potential alignment with Chinese government perspectives, leading to concerns about inherent biases and censorship within the AI's outputs.

DeepSeek R1's response when asked about the events of June 4, 1989.

The uncensored reply from OpenAI ChatGPT o1 to the same question.

Functions and Applications

DeepSeek’s AI models are designed for a variety of applications, including:

Mathematical Reasoning: Excelling in tasks requiring logical inference.

Coding Assistance: Offering robust support for programming challenges.

Problem Solving: Tackling real-time scenarios with efficiency.

Conversational AI: Enabling advanced chatbots for diverse industries.

These functionalities make DeepSeek a valuable tool for researchers, developers, and professionals.

What Users Should Know

While DeepSeek's models are accessible and cost-efficient, users should be mindful of the following:

Privacy Risks: Be aware that user inputs may be collected for training.

Censorship: Expect biases on politically sensitive topics.

Terms of Use: Review the company's policies to understand data usage rights.

Source: DeepSeek | Wiki, High-Flyer | Wikiwand, Wenfeng | Wikiwand, Fello AI, Yahoo Finance, Unite.AI, InterConnects, ChatHub.gg, Tom’s Hardware, arXiv, The Decoder, CUDO Compute, Reddit